Vision SDK

Location with visual context

By: Eric Gundersen


At Locate we’re introducing the Vision SDK — bridging the phone, the camera, and the automobile to give developers total control over the driving experience.

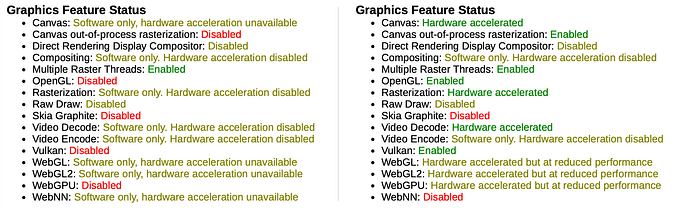

The Vision SDK works in conjunction with our live traffic and navigation, allowing any developer on iOS and Android to build heads-up displays directly into their apps.

Equipped with better navigation, paired with augmented reality, and powered by high-performance computer vision, the SDK turns the mobile camera into a powerful sensor — developers have the key to the car.

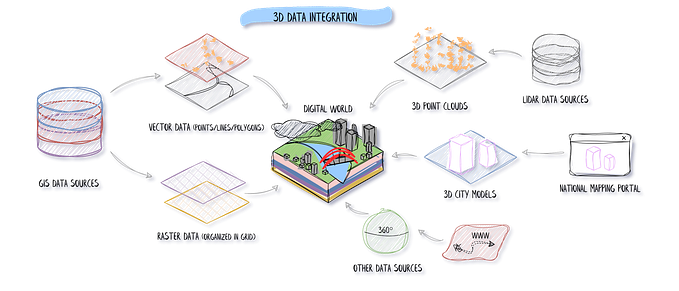

Running neural networks directly on the mobile device enables real-time segmentation of the environment. The SDK performs semantic segmentation and discrete feature detection (like spotting speed limit signs and pedestrians), and it supports augmented reality-powered navigation.
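To make "semantic segmentation" concrete, here is a minimal sketch of the final step, assuming a network has already produced one score per class for every pixel. The label set, the `segment` and `class_share` helpers, and the sample scores are all hypothetical and are not part of the Vision SDK.

```python
# Hypothetical class labels; the SDK's real label set is not described in this post.
LABELS = ["road", "car", "pedestrian", "sign"]


def segment(scores):
    """Turn per-pixel class scores into a per-pixel label map.

    scores[y][x] is a list holding one score per entry in LABELS.
    Each pixel gets the label with the highest score (argmax).
    """
    return [
        [LABELS[max(range(len(px)), key=px.__getitem__)] for px in row]
        for row in scores
    ]


def class_share(label_map, label):
    """Return the fraction of pixels assigned to `label`."""
    total = sum(len(row) for row in label_map)
    hits = sum(cell == label for row in label_map for cell in row)
    return hits / total if total else 0.0


# A tiny 2x2 "frame" of made-up network outputs.
frame_scores = [
    [[0.9, 0.05, 0.03, 0.02], [0.1, 0.7, 0.1, 0.1]],
    [[0.6, 0.2, 0.1, 0.1], [0.1, 0.1, 0.2, 0.6]],
]
label_map = segment(frame_scores)
```

An app could use a summary such as `class_share(label_map, "road")` to decide, for example, whether enough of the frame is drivable surface to draw a navigation overlay.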

As developers use the Vision SDK, they get not only live context directly on the edge but also access to anonymized, real-time data. Hook into events from the Vision SDK, read the data, and control what data to send back.
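The hook, read, and control flow described here could look roughly like the sketch below. Every name in it (`VisionEvent`, `EventHub`, `Uploader`, the event kinds, and the payload fields) is an assumption made up for illustration; the post does not describe the SDK's actual interface.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set


@dataclass
class VisionEvent:
    kind: str                   # hypothetical event name, e.g. "speed_limit_sign"
    payload: Dict[str, object]  # hypothetical detection data


@dataclass
class EventHub:
    """Hypothetical stand-in for an SDK event source; not the Vision SDK API."""
    listeners: List[Callable[[VisionEvent], None]] = field(default_factory=list)

    def subscribe(self, listener: Callable[[VisionEvent], None]) -> None:
        self.listeners.append(listener)

    def emit(self, event: VisionEvent) -> None:
        for listener in self.listeners:
            listener(event)


class Uploader:
    """Queues only the event kinds and payload fields the developer allows."""

    def __init__(self, allowed_kinds: Set[str], allowed_fields: Set[str]):
        self.allowed_kinds = allowed_kinds
        self.allowed_fields = allowed_fields
        self.outbox: List[Dict[str, object]] = []

    def __call__(self, event: VisionEvent) -> None:
        if event.kind not in self.allowed_kinds:
            return  # this kind of event never leaves the device
        kept = {k: v for k, v in event.payload.items() if k in self.allowed_fields}
        self.outbox.append({"kind": event.kind, **kept})


hub = EventHub()
seen: List[VisionEvent] = []
hub.subscribe(seen.append)  # read every event locally
uploader = Uploader({"speed_limit_sign"}, {"value_kph"})
hub.subscribe(uploader)     # control what is sent back

hub.emit(VisionEvent("speed_limit_sign", {"value_kph": 50, "lat": 37.77, "lon": -122.42}))
hub.emit(VisionEvent("pedestrian", {"distance_m": 12.5}))
```

In this sketch the app reads both events locally, while the outbox holds only the sign's speed value; the coordinates and the pedestrian detection stay on the device.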

As part of this launch, we’re also announcing our partnership with Microsoft Azure. Azure’s IoT Edge Runtime is completely open source, allowing developers to process events on the edge and stream incremental data updates to the cloud.

For true live location, we need to reduce latency, because the split-second decisions you make when you drive are critical. Optimizing the Vision SDK for the sensors and chips inside devices achieves real-time data processing with extraordinarily low latency. It’s this level of detailed hardware optimization that will give us the performance we need.

On the hardware side, we’re collaborating with Arm on low-power optimization that will bring the Vision SDK to tens of millions of devices and machines. Arm is the company that makes performance computing on the edge possible, powering everything from the microprocessors in your phone to the NVIDIA Drive PX.

Our partnerships with Arm and Microsoft are crucial to making the Vision SDK a reality. If you’re at Locate, you can see this all running in a car on the first floor.

For now, the Vision SDK is available only in private beta for select partners. We will make it publicly available in September. Sign up now to be the first to get access.